Why?

I love colours, and I love using them even more. Unfortunately, I have to admit that I don't understand colours well enough to use them properly. It is the same frustration I had about a year ago, when I first realised that I couldn't plot anything better than the defaults in Excel and Matlab! It was for that very reason that I decided to find a solution and eventually learned R. I am still learning it today.

What's wrong with my previous attempts to use colours? Let's look at CrimeMap. When I first created the heatmaps, the colour choices were entirely based on personal experience. To represent danger, I always think of yellow (warning) and red (something just got real). This combination eventually became the default setting.

"Does it mean the same thing when others look at it?"

This question has been bugging me since then. As a temporary solution for CrimeMap, I included controls for users to define their own colour scheme. Below are some examples of crime heatmaps that you can create with CrimeMap.

Personally, I really like this feature. I even marketed this as "

highly flexible and customisable - colour it the way you like it!" ... I remember saying something like that during

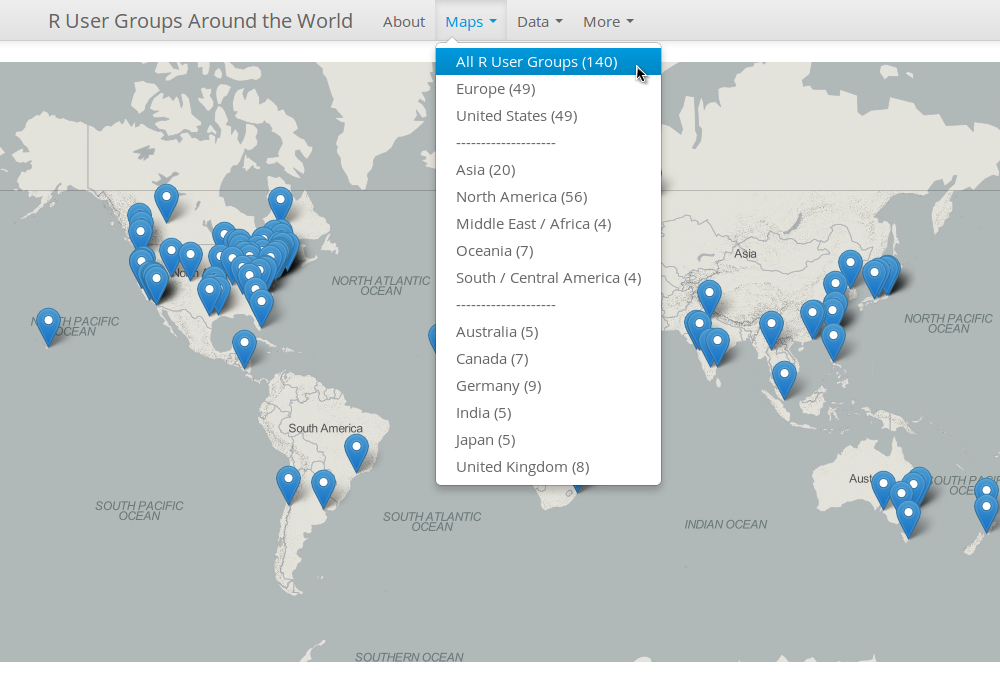

LondonR (and I will probably repeat this during

useR later).

Then again, the more colours I can use, the more doubts I have about the default yellow-red colour scheme. What do others see in those colours?

I need to improve on this! In reality, you have one chance, maybe just a few seconds, to get attention and deliver your key messages. You can't ask others to tweak the colours of your data visualisation until they understand what it means.

So I know another learning-by-doing journey is required to better understand the use of colours. This time, since I already have about a year of R experience under my belt, I decided to capture all the references, thinking and code in one R package.

Existing Tools

Given my limited background in colour, I needed to research what is already available. So far I have found the following. Please suggest any other options you think I should know about (thanks!). I am sure this list will grow as I continue to explore.

Online Palette Generator with API

Key R Packages

- RColorBrewer by Erich Neuwirth - I have been using this since my very first days with R

- colorRamps by Tim Keitt - another package that I have been using for a long time

- colorspace by Ross Ihaka et al. - an important package for HCL colours

- colortools by Gaston Sanchez - for HSV colours

- munsell by Charlotte Wickham - very useful for exploring and using the Munsell colour system

Funky R Packages and Posts:

Other Languages:

The Plan

"In order to learn something new, find an interesting problem and dive into it!" This is roughly what Sebastian Thrun said during "Introduction to A.I.", the very first MOOC I participated in. It had a really deep impact on me, and it has been my motto ever since. Fun is key. This project is no exception, but I do intend to achieve a bit more this time. Algorithmically, the goal of this mini project can be represented as the code below:

> is.fun("my.colours") & is.informative("my.colours")

> TRUE

More seriously, based on the tools and packages mentioned above, I would like to develop a new R package that performs the following five tasks. Effectively, these should translate into five key functions (plus a sixth one, a wrapper that goes through all the steps in one go).

- Extracting colours from images (local or online).

- Selecting (and adjusting, if needed) colours with web design and colour blindness in mind.

- Arranging colours based on colour theory.

- Evaluating the aesthetics of a palette systematically (quantifying beauty).

- Sharing the palette with friends easily (think of the publish( ) and load_gist( ) functions in Shiny, rCharts etc.).
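The "arranging" step could start from something as simple as ordering a palette by hue rather than, say, alphabetically by hex code. Below is a minimal base-R sketch, assuming the palette is a character vector of hex colours; `order_by_hue()` is a hypothetical helper for illustration, not part of rPlotter or of any colour-theory method from the packages above.

```r
# Hypothetical helper (not part of rPlotter): arrange a palette by hue.
order_by_hue <- function(hex) {
  # col2rgb() gives a 3 x n matrix on 0-255; rgb2hsv() returns rows "h", "s", "v"
  hsv <- grDevices::rgb2hsv(grDevices::col2rgb(hex))
  # Sort by hue first, then break ties by value (darkest first)
  hex[order(hsv["h", ], hsv["v", ])]
}

order_by_hue(c("#0000FF", "#FF0000", "#00FF00"))
# returns "#FF0000" "#00FF00" "#0000FF" (red, green, blue)
```

Hue alone is a crude ordering: greys and near-greys all have a hue of about zero, so a real arrangement step would likely also need saturation and lightness.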

I decided to start experimenting with colourful movie posters, especially those from Quentin Tarantino. I love his movies, but I also understand that they might offend some people. That is not my intention here; I just want to bring out the colours. If these examples somehow offend you, please accept my apologies in advance.

First function - rPlotter::extract_colours()

The first step is to extract colours from an image. This function is based on dsparks' k-means palette gist. I modified it slightly to include the excellent EBImage package for easy image processing. For now, I am including this function in my rPlotter package (a package with functions that make plotting in R easier, still in early development).

Note that this is the very first step of the whole process. This function ONLY extracts colours and returns them in simple alphabetical order of their hex codes.
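The idea behind a k-means palette is to treat every pixel as a point in RGB space, cluster those points into k groups, and use each cluster's mean colour as one palette entry. The base-R sketch below illustrates that idea under stated assumptions: `extract_palette_sketch()` is a hypothetical helper, not the rPlotter API, and it skips image loading entirely. It expects an n x 3 matrix of RGB intensities in [0, 1]; for a real image you would first read it with an image package such as EBImage and reshape the array to one row per pixel. The "image" here is synthetic.

```r
# Minimal sketch of k-means colour extraction in base R.
# extract_palette_sketch() is a hypothetical helper, not rPlotter's API.
# `pixels`: an n x 3 numeric matrix of R, G, B intensities in [0, 1].
extract_palette_sketch <- function(pixels, num_col = 5, seed = 1) {
  stopifnot(is.matrix(pixels), ncol(pixels) == 3)
  set.seed(seed)  # k-means uses random starts; fix the seed for reproducibility
  fit <- stats::kmeans(pixels, centers = num_col, nstart = 10)
  centres <- pmin(pmax(fit$centers, 0), 1)  # clamp cluster means into [0, 1]
  pal <- grDevices::rgb(centres[, 1], centres[, 2], centres[, 3])
  sort(pal)  # plain alphabetical order of the hex codes
}

# Synthetic "image": 200 noisy pixels around each of three colours.
set.seed(42)
true_cols <- rbind(c(0.8, 0.1, 0.1), c(0.1, 0.2, 0.8), c(0.9, 0.8, 0.1))
pixels <- true_cols[rep(1:3, each = 200), ] +
  matrix(stats::rnorm(600 * 3, sd = 0.02), ncol = 3)
pal <- extract_palette_sketch(pixels, num_col = 3)
pal
```

Sorting by hex code is deterministic but carries no perceptual meaning, which is exactly the limitation the examples below run into.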

The following examples further illustrate why a simple extraction alone is not good enough.

Example One - R Logo

Let's start with the classic R logo.

The three-colour palette looks OK. The colours are less distinctive with five colours. In the seven-colour palette, I cannot tell colours (3) and (5) apart. This example shows that additional processing is needed to rearrange and adjust the colours, especially when you are trying to create a many-colour palette for proper web design and publication.
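One way to turn "I cannot tell (3) and (5) apart" into a number is to measure how far apart palette colours are in a roughly perceptual colour space. The sketch below, assuming a character vector of hex colours, converts them to CIE Lab with base R's `convertColor()` and reports the closest pair by Euclidean distance (the simple CIE76 Delta E). `closest_pair()` and the sample palette are hypothetical, and CIE76 is the crudest Delta E formula; newer formulas such as CIEDE2000 correct some of its weaknesses.

```r
# Hypothetical helper: find the two least distinguishable colours in a palette.
closest_pair <- function(hex) {
  rgb01 <- t(grDevices::col2rgb(hex)) / 255  # n x 3, sRGB values in [0, 1]
  lab <- grDevices::convertColor(rgb01, from = "sRGB", to = "Lab")
  d <- as.matrix(stats::dist(lab))           # pairwise CIE76 Delta E
  diag(d) <- Inf                             # ignore each colour's distance to itself
  idx <- which(d == min(d), arr.ind = TRUE)[1, ]
  list(pair = hex[idx], delta_e = min(d))
}

# Two nearly identical blues hide among otherwise distinct colours.
res <- closest_pair(c("#1F77B4", "#2078B5", "#D62728", "#2CA02C"))
res
```

A small Delta E for the closest pair is a cheap warning sign that a palette needs adjusting before it is used.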

Example Two - Kill Bill

What does Quentin Tarantino see in yellow and red?

Actually, the results are not too bad (at least I can tell the colours apart).

Example Three - Palette Tarantino

OK, how about a palette set based on some of his movies?

I know more work is needed, but for now I am quite happy playing with this.

Example Four - Palette Simpsons

Don't ask why, ask why not ...

I am loving it!

Going Forward

The examples above show my initial experiments with colours. To me, this will be a very interesting and useful long-term project. I look forward to making some sports-related data viz once the package reaches a stable version.

The next function in development will be "select_colours()". It will be based on further study of colour theory and other factors such as colour blindness. I hope to develop a function that automatically picks the best possible combination of the original colours (or adjusts them slightly, only if necessary). Once it is developed, a blog post will follow. Please feel free to fork rPlotter and suggest new functions.
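As one possible starting point for picking "the best combination of original colours", here is a greedy farthest-point (max-min) selection in CIE Lab: start from the most outlying colour, then repeatedly add the candidate whose nearest already-chosen colour is farthest away. This is a swapped-in, generic technique, not the planned select_colours(); `pick_distinct()` is a hypothetical helper, and it ignores colour blindness entirely.

```r
# Hypothetical sketch, not the planned select_colours(): greedily pick `k`
# colours that are as far apart as possible in CIE Lab (max-min selection).
pick_distinct <- function(hex, k) {
  stopifnot(k >= 1, k <= length(hex))
  lab <- grDevices::convertColor(t(grDevices::col2rgb(hex)) / 255,
                                 from = "sRGB", to = "Lab")
  d <- as.matrix(stats::dist(lab))    # pairwise CIE76 Delta E
  chosen <- which.max(rowSums(d))     # start with the most outlying colour
  while (length(chosen) < k) {
    # Distance from every candidate to its nearest already-chosen colour
    gap <- apply(d[, chosen, drop = FALSE], 1, min)
    gap[chosen] <- -Inf               # never pick the same colour twice
    chosen <- c(chosen, which.max(gap))
  }
  hex[chosen]
}

# Two near-duplicate reds, two near-duplicate blues and one green.
candidates <- c("#FF0000", "#FE0101", "#0000FF", "#0101FE", "#00FF00")
pick_distinct(candidates, 3)
```

Greedy max-min does not guarantee the globally best subset, but it is simple, deterministic and avoids near-duplicates such as colours (3) and (5) in the R logo example.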

useR! 2014

If you're going to useR! this year, please do come and say hi during the poster session. I will be presenting a poster on the crime map projects. We can have a chat about CrimeMap, rCrimemap, this colour palette project or any other interesting open-source projects.

Acknowledgement

I would like to thank Karthik Ram for developing and sharing the wesanderson package in the first place. I asked him if I could add some more colours to it, and he came back with some suggestions. The conversation was followed by some more interesting tweets from Russell Dinnage and Noam Ross. Thank you all!

I would also like to thank Roland Kuhn for showing how to embed individual files of a gist. This is the first time I have embedded code here properly.

Tweets are the easiest way for me to discuss R these days. Any feedback or suggestion,